Causal-Driven World Model with Interactive Learning

Causal Foundation Model

A foundation model that doesn’t just observe the world: it understands and acts through intervention and feedback.

We move from passive, correlation-trained LLMs to a causal-driven world model. The system learns a structured world model, a latent causal graph over entities, states, and actions, and closes the loop between understanding and action: it intervenes, observes the feedback, and refines that graph.

Architecturally, perception modules learn a structured world model; a planner selects low-risk, high-information interventions; a learning loop updates beliefs from interventional feedback. The result is a model that generalizes across shifts, plans beyond the training distribution, and explains its decisions.
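The planner-and-update loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the system's actual implementation: the two candidate graphs, the `LIKELIHOOD` and `RISK` tables, the `risk_weight` trade-off, and all function names are hypothetical. The planner scores each intervention by expected information gain minus a risk penalty, and the learning loop applies Bayes' rule to the observed outcome.

```python
import math
import random

# Hypothetical toy setup: two candidate causal graphs over binary X and Y.
# LIKELIHOOD[h][a] = P(non-intervened variable == 1 | graph h, intervention a).
LIKELIHOOD = {
    "X->Y": {"do(X=1)": 0.9, "do(Y=1)": 0.5},
    "Y->X": {"do(X=1)": 0.5, "do(Y=1)": 0.9},
}
# Hypothetical risk score per intervention (higher means riskier).
RISK = {"do(X=1)": 0.05, "do(Y=1)": 0.30}


def entropy(belief):
    """Shannon entropy (bits) of a belief over hypotheses."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


def outcome_prob(hypothesis, action, outcome):
    """P(outcome | hypothesis, action) for a binary outcome."""
    p1 = LIKELIHOOD[hypothesis][action]
    return p1 if outcome == 1 else 1.0 - p1


def update(belief, action, outcome):
    """Bayesian belief update from one piece of interventional feedback."""
    posterior = {h: p * outcome_prob(h, action, outcome) for h, p in belief.items()}
    total = sum(posterior.values())
    return {h: p / total for h, p in posterior.items()}


def expected_info_gain(belief, action):
    """Expected reduction in entropy from performing `action`."""
    gain = entropy(belief)
    for outcome in (0, 1):
        p_out = sum(p * outcome_prob(h, action, outcome) for h, p in belief.items())
        if p_out > 0:
            gain -= p_out * entropy(update(belief, action, outcome))
    return gain


def choose_intervention(belief, risk_weight=0.1):
    """Pick the intervention with the best information-vs-risk trade-off."""
    return max(RISK, key=lambda a: expected_info_gain(belief, a) - risk_weight * RISK[a])


def run_loop(true_hypothesis, steps=30, seed=0):
    """Simulate the plan -> intervene -> observe -> update loop."""
    rng = random.Random(seed)
    belief = {h: 1.0 / len(LIKELIHOOD) for h in LIKELIHOOD}
    for _ in range(steps):
        action = choose_intervention(belief)
        outcome = 1 if rng.random() < LIKELIHOOD[true_hypothesis][action] else 0
        belief = update(belief, action, outcome)
    return belief


if __name__ == "__main__":
    print(run_loop("X->Y"))
```

With a uniform prior both interventions are equally informative in this toy setup, so the planner prefers the lower-risk `do(X=1)`; as evidence accumulates, the belief is expected to concentrate on the graph that generated the feedback.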

Why This Matters

The current AI paradigm is data-hungry and correlation-bound: most public corpora are exhausted, and bigger models memorize associations without uncovering the mechanisms that produce them. A causal-driven world model breaks that ceiling. By acting to learn—designing interventions, gathering feedback, and updating a structured world model—we unlock:

Safety & Governance

Reliability Under Shift

Scientific & Creative Acceleration

Efficiency & Sustainability

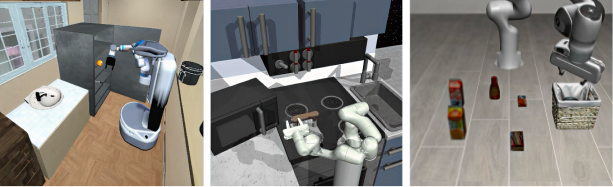

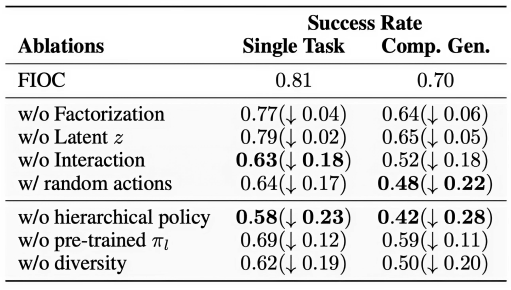

Learning Interactive and Disentangled Object-Centric World Models for Reasoning, Planning and Control. Feng, Huang, et al. Under Submission.

FIOC: explicitly models causal relations and interactions among objects and agents.


© 2025 Abel.AI, Inc. All rights reserved.

01 Modelling cause-effect relationships: uncover how the world actually works.

02 Interact with its environment: through interventions to generate new, informative data.

03 Simulate counterfactuals: to explore “what-if” scenarios.

04 Iteratively refine its own world model: closing the loop between understanding and action.
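To make the "simulate counterfactuals" idea concrete, here is a small sketch of the standard abduction, action, prediction recipe on a toy structural causal model. The linear form `Y = slope * X + U`, the class name `LinearSCM`, and the numbers are assumptions chosen for illustration; they are not taken from the system described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearSCM:
    """Hypothetical toy structural causal model: Y = slope * X + U."""
    slope: float

    def abduct_noise(self, x_obs, y_obs):
        # Abduction: recover the unobserved noise U consistent with what was seen.
        return y_obs - self.slope * x_obs

    def predict(self, x, u):
        # Prediction: evaluate the structural equation for Y.
        return self.slope * x + u

    def counterfactual_y(self, x_obs, y_obs, x_new):
        # Action: replace X with x_new while keeping the abducted noise fixed.
        u = self.abduct_noise(x_obs, y_obs)
        return self.predict(x_new, u)


scm = LinearSCM(slope=2.0)
# Observed X=1, Y=3. What would Y have been had X been 2?
print(scm.counterfactual_y(x_obs=1.0, y_obs=3.0, x_new=2.0))  # prints 5.0
```

The key design point is that the noise term inferred from the observation is held fixed, which is what separates a counterfactual query about this specific case from a fresh interventional prediction.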